Reliability pillar: How do you manage Service Quotas and constraints?

Reliability pillar - This article is part of a series.

In the previous article of this series, we delved into the Reliability pillar structure, describing the Design Principles and the structure of the best practices.

If you missed it or need a recap, you can find it here or access it through the dropdown menu at the beginning of the page.

After two introductory articles, we finally start with the First Reliability Pillar question, which is part of Foundations:

How do you manage Service Quotas and constraints?

First of all, let’s define what Quotas and constraints are.

Service Quotas #

One characteristic of the cloud is its ability to acquire and release resources quickly, on-demand. This might give you the impression of working with limitless resources, but that’s not entirely correct, especially when working with serverless architectures.

Each AWS service has limits, known as service quotas. A quota is the maximum number of resources or operations that an AWS account can use or perform. Most quotas apply to a specific region, while some apply globally at the account level. Service quotas are often soft limits set by AWS, meaning you can request an increase if necessary.

Why does AWS set these limits?

If service quotas are soft limits and you can request an increase, why does AWS set them?

The reason lies in the multi-tenancy nature of the cloud: to prevent any single account from consuming an excessive amount of resources, potentially affecting the performance of other accounts in the same region.

Constraints #

In contrast to service quotas, which are set by AWS, Constraints refer to physical hardware limits. As the name suggests, these are hard limits. For example, you cannot increase the storage capacity of a physical disk unless you use a different physical disk.

Best Practices #

Now that we have clarified what service quotas and constraints are, let’s list the best practices for managing them:

- Aware of service quotas and constraints

- Manage service quotas across accounts and regions

- Accommodate fixed service quotas and constraints through architecture

- Monitor and manage quotas

- Automate quota management

- Ensure that a sufficient gap exists between the current quotas and the maximum usage to accommodate failover

Each best practice is documented in detail, so I won’t summarize them here, but I strongly recommend reading them carefully. Instead, I’ll share some work experience and provide a practical solution to monitor and manage quotas that aligns with most of the best practices. You can find the links to each best practice documentation page in the Resources section.

💡 3 main things to keep in mind #

- Using AWS Service Quotas to check quotas and request history.

- Recognizing that some quotas and constraints can impact your architectural decisions.

- Understanding that some quotas and constraints can impact your production environment.

Using AWS Service Quotas to check quotas and request history #

AWS Service Quotas is a service that allows you to view applied and default quota values for almost all AWS services in a certain region.

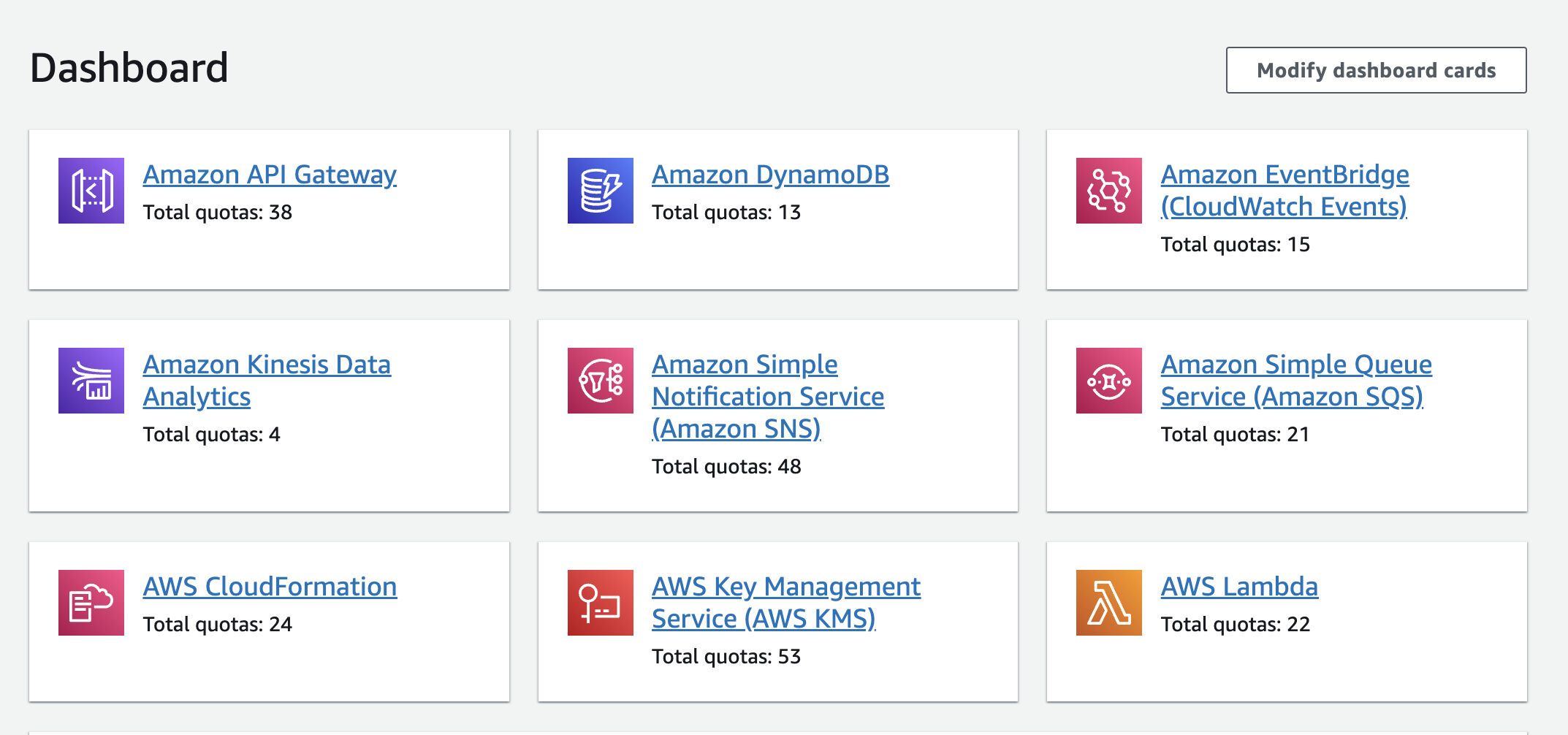

You can customize the dashboard to display services of interest.

Additionally, you can navigate services, explore quotas, request increases for adjustable quotas, and track quota request history.

This service is accessible not only through the console but also via the CLI and AWS SDK, facilitating quota management automation (refer to Best Practices).

I’ve created a Lambda function to automate quota increase requests. You can find the code here.

The following snippet shows what a service quota increase request looks like.

```typescript
import {
  ServiceQuotasClient,
  RequestServiceQuotaIncreaseCommand,
} from "@aws-sdk/client-service-quotas";

export const requestServiceQuotaIncrease = async (
  quotaCode: string,
  serviceCode: string,
  desiredValue: number
) => {
  const config = {};
  const client = new ServiceQuotasClient(config);
  const input = {
    // RequestServiceQuotaIncreaseRequest
    ServiceCode: serviceCode, // required
    QuotaCode: quotaCode, // required
    DesiredValue: desiredValue, // required
  };
  const command = new RequestServiceQuotaIncreaseCommand(input);
  const response = await client.send(command);
  return response;
};
```

In order to make the request, you need to know:

- serviceCode: the identifier of the related service for which you want to request a quota increase.

- quotaCode: the identifier of the quota for which you want to request an increase.

- desiredValue: the desired value you would like to set for the quota.

To obtain the serviceCode value for an AWS service, you can use the ListServices operation. To pick the right service code from the result, you need to know the service name.

To find the quotaCode for a specific quota, you can use the ListServiceQuotas operation, passing the serviceCode of the service. To pick the right quota code from the result, you need to know the quota name.

Both operations return paginated results. Look at the GitHub repo to see how I retrieved the serviceCode and quotaCode for a service and quota of interest.
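To illustrate the pagination part, here is a minimal sketch of a generic lookup that keeps fetching pages until it finds a match. It is not the code from the repo: `Page`, `FetchPage` and `findInPages` are hypothetical names, and the page fetcher is injected so the loop stays independent of the SDK. The only thing taken from the real API is that the responses carry a `NextToken` when more pages are available.

```typescript
// One page of results. `NextToken` mirrors the field the Service Quotas
// API returns when more pages are available.
interface Page<T> {
  items: T[];
  NextToken?: string;
}

// Hypothetical adapter type: in a real Lambda this would wrap
// client.send(new ListServicesCommand({ NextToken })) or
// client.send(new ListServiceQuotasCommand({ ServiceCode, NextToken })).
type FetchPage<T> = (nextToken?: string) => Promise<Page<T>>;

// Walk the pages in order and return the first item that matches,
// or undefined once the last page has been checked.
async function findInPages<T>(
  fetchPage: FetchPage<T>,
  matches: (item: T) => boolean
): Promise<T | undefined> {
  let nextToken: string | undefined = undefined;
  do {
    const page: Page<T> = await fetchPage(nextToken);
    for (const item of page.items) {
      if (matches(item)) {
        return item;
      }
    }
    nextToken = page.NextToken;
  } while (nextToken !== undefined);
  return undefined;
}
```

For ListServices, the adapter would return the `Services` array of the response as `items` and the match would compare `ServiceName`. For ListServiceQuotas, it would return `Quotas` and compare `QuotaName`. Stopping at the first match avoids fetching pages that are not needed.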

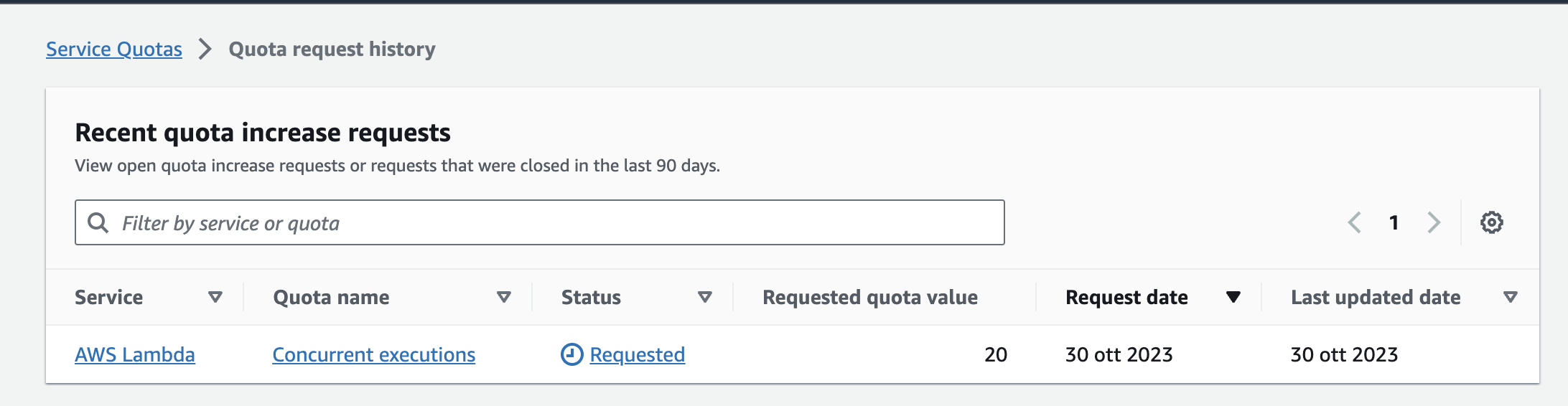

I tried to increase the Lambda Concurrent Executions service quota.

Lambda Concurrent Executions is the maximum number of events that functions can process simultaneously in the current region. The AWS default quota value is 1000, but when you create a new account you will see that the applied quota value is 10. AWS automatically increases the value based on usage, up to 1000. Beyond that, you have to make a request.

To make sure my increase request would be accepted, I asked to raise the applied quota from 10 to 20.

Based on my solution, I have triggered the lambda with the following input parameters:

```typescript
{
  serviceName: 'AWS Lambda',
  quotaName: 'Concurrent executions',
  desiredValue: 20
}
```

The request returned 200, and I could see on the AWS Service Quotas > Quota request history page that a new request had been submitted.

Recognizing that some quotas and constraints can impact your architectural decisions #

Here is an example of a quota that could impact your architectural decisions. In the design phase, when you’re developing an event-driven architecture and considering SQS, not being aware of the SQS quotas can be costly. Take the message payload limit (256 KiB at the time of writing). If you don’t know about it, you may start development only to realize during testing that SQS cannot handle your message payloads. Or worse, you might realize it only when your new feature is in production, forcing a rollback to square one.
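A cheap way to surface this kind of limit early is to check payload sizes in tests or before sending. The sketch below is only an illustration: `utf8ByteLength` and `fitsInSqsMessage` are hypothetical helper names, the default limit is the 256 KiB figure quoted above, and only the message body is measured, so anything else that counts toward the limit is ignored.

```typescript
// Limit quoted in this article. Passed as a parameter below so it can be
// adjusted if the quota for your queue differs.
const SQS_MAX_PAYLOAD_BYTES = 256 * 1024;

// Count the UTF-8 encoded size of a string without runtime-specific helpers.
function utf8ByteLength(text: string): number {
  let bytes = 0;
  for (let i = 0; i < text.length; i++) {
    const code = text.charCodeAt(i);
    if (code < 0x80) {
      bytes += 1;
    } else if (code < 0x800) {
      bytes += 2;
    } else if (code >= 0xd800 && code <= 0xdbff && i + 1 < text.length) {
      const next = text.charCodeAt(i + 1);
      if (next >= 0xdc00 && next <= 0xdfff) {
        // Valid surrogate pair: one code point encoded as 4 bytes.
        bytes += 4;
        i++;
      } else {
        // Lone high surrogate: encoders emit U+FFFD, which is 3 bytes.
        bytes += 3;
      }
    } else {
      bytes += 3;
    }
  }
  return bytes;
}

// True when the serialized body stays within the given byte limit.
function fitsInSqsMessage(
  body: string,
  maxBytes: number = SQS_MAX_PAYLOAD_BYTES
): boolean {
  return utf8ByteLength(body) <= maxBytes;
}
```

Measuring bytes rather than `body.length` matters because non-ASCII characters take more than one byte each, so a string can be under the limit in characters and over it in bytes.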

Understanding that some quotas and constraints can impact your production environment #

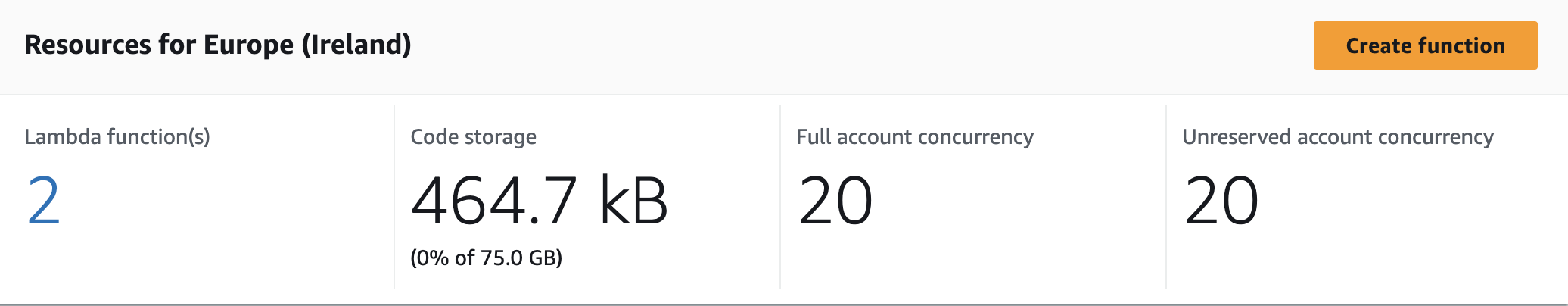

Certain services can reach their quotas over an extended period. Each Lambda function version and layer version consumes storage. The Lambda Function and layer storage quota represents the available storage for deployment packages and layer archives in the current Region. As you deploy more functions and updates, storage consumption increases.

If you reach the 75 gigabyte limit, you won’t be able to deploy any more lambda functions. Therefore, monitoring this quota is of utmost importance.

Monitor and manage Lambda quotas #

If you’re working with a serverless paradigm, then you’re likely using Lambda. Let’s explore how to effectively monitor and manage Lambda quotas.

While it may not be necessary to monitor all Lambda quotas, understanding them is crucial during the design phase and when selecting services. For example, consider the synchronous payload quota, which is the maximum size of an incoming synchronous invocation request or outgoing response. You may not actively monitor this quota, but you need to be aware of it, especially if your requests or responses may exceed 6 MB.

Now, let’s focus on two critical quotas: Concurrent Execution and Function and layer storage.

Concurrent Execution #

In the Using AWS Service Quotas to check quotas and request history section, we discussed what Concurrent Execution is and how to request a quota increase.

So, how do we know when it’s time to make that request?

What we didn’t cover previously is that once concurrent executions reach the quota, Lambda throttles any additional invocations, potentially heavily impacting your production environment.

To monitor this, we’ll create a CloudWatch alarm.

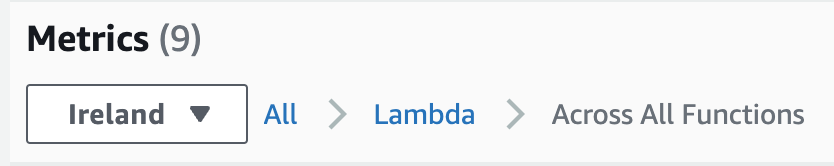

In the AWS console, navigate to CloudWatch and go to All Alarms. Click on Create Alarm and proceed to specify the metric and conditions. Under the Browse tab, select Lambda and then Across all functions. Choose ConcurrentExecutions as the metric.

Under Conditions, select Greater and set the threshold value to 800. Given that the AWS default value is 1000, a threshold of 800 (80% of the quota) leaves a sufficient gap between current usage and the quota to accommodate failover. If your applied quota is different, adjust the threshold accordingly.
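The same alarm can also be defined in code. The sketch below only builds the parameter object and derives the threshold from the quota, under a few assumptions of mine: the field names follow the CloudWatch PutMetricAlarm API, while the alarm name, the `Maximum` statistic, the 60-second period, the single evaluation period and the SNS topic used as alarm action are illustrative choices that the console steps above do not specify.

```typescript
// Subset of the PutMetricAlarm parameters used in this sketch.
interface ConcurrencyAlarmInput {
  AlarmName: string;
  Namespace: string;
  MetricName: string;
  Statistic: string;
  Period: number;
  EvaluationPeriods: number;
  Threshold: number;
  ComparisonOperator: string;
  AlarmActions: string[];
}

// Build the alarm definition so that it fires when concurrency goes above
// `thresholdPercent` of the applied quota (80% of 1000 gives 800).
function buildConcurrencyAlarm(
  appliedQuota: number,
  thresholdPercent: number,
  topicArn: string
): ConcurrencyAlarmInput {
  if (appliedQuota <= 0 || thresholdPercent <= 0 || thresholdPercent >= 100) {
    throw new Error("quota must be positive and percent must be between 0 and 100");
  }
  return {
    AlarmName: "lambda-concurrent-executions-near-quota", // hypothetical name
    Namespace: "AWS/Lambda",
    MetricName: "ConcurrentExecutions", // account-level metric, no dimensions
    Statistic: "Maximum", // assumption: alarm on the peak within each period
    Period: 60, // assumption: one-minute periods
    EvaluationPeriods: 1, // assumption: fire on the first breach
    Threshold: Math.floor((appliedQuota * thresholdPercent) / 100),
    ComparisonOperator: "GreaterThanThreshold", // "Greater" in the console
    AlarmActions: [topicArn], // e.g. an SNS topic that notifies the team
  };
}
```

The returned object could then be passed to `PutMetricAlarmCommand` from `@aws-sdk/client-cloudwatch`. Deriving the threshold from the applied quota, instead of hard-coding 800, keeps the gap proportional when the quota is increased.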

Function and layer storage #

The Function and Layer Storage Lambda quota refers to the amount of storage available for deployment packages and layer archives in the current Region. The AWS default quota is 75 gigabytes. If you reach this limit, you won’t be able to create any new lambdas or deploy updates.

Since this value is not available as a metric, we’ll use the AWS SDK to monitor the quota.

You can retrieve details about your account’s limits and usage in a specific Region by sending a GetAccountSettingsCommand with the LambdaClient.

```typescript
import {
  LambdaClient,
  GetAccountSettingsCommand,
  GetAccountSettingsCommandOutput,
} from "@aws-sdk/client-lambda";

// Fetch the Lambda account limits and current usage for the client's Region.
export const getAccountSettings =
  async (): Promise<GetAccountSettingsCommandOutput> => {
    const config = {};
    const client = new LambdaClient(config);
    const input = {};
    const command = new GetAccountSettingsCommand(input);
    const accountSettings = await client.send(command);
    return accountSettings;
  };
```

Here is what the response looks like:

```typescript
{
  AccountLimit: {
    TotalCodeSize: Number("long"),
    CodeSizeUnzipped: Number("long"),
    CodeSizeZipped: Number("long"),
    ConcurrentExecutions: Number("int"),
    UnreservedConcurrentExecutions: Number("int"),
  },
  AccountUsage: {
    TotalCodeSize: Number("long"),
    FunctionCount: Number("long"),
  },
}
```

At this point, you can calculate the usage percentage by dividing the used storage by the limit and multiplying by 100:

(AccountUsage.TotalCodeSize / AccountLimit.TotalCodeSize) * 100

If the percentage is close to maximum usage, you can send a notification to an SNS topic for actions like sending an email or triggering a lambda to notify via Slack or similar channels. You could even automate the quota increase request as demonstrated in Using AWS Service Quotas to check quotas and request history section.
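As a rough sketch of that check (not the code from my repo), the helpers below take an object shaped like the GetAccountSettings response and decide whether a notification is due. `storageUsagePercent` and `shouldNotify` are hypothetical names, and the 80% default threshold is an assumption that you should tune to your deployment rate.

```typescript
// Only the fields this check needs, mirroring the response shape above.
interface StorageSettings {
  AccountLimit?: { TotalCodeSize?: number };
  AccountUsage?: { TotalCodeSize?: number };
}

// Percentage of the function and layer storage quota currently in use.
function storageUsagePercent(settings: StorageSettings): number {
  const limit = settings.AccountLimit?.TotalCodeSize;
  const used = settings.AccountUsage?.TotalCodeSize;
  if (limit === undefined || used === undefined || limit <= 0) {
    throw new Error("TotalCodeSize is missing from the account settings");
  }
  return (used / limit) * 100;
}

// True when usage has reached the threshold, i.e. when it is time to
// publish to the SNS topic or request a quota increase.
function shouldNotify(
  settings: StorageSettings,
  thresholdPercent: number = 80 // assumption: notify at 80% usage
): boolean {
  return storageUsagePercent(settings) >= thresholdPercent;
}
```

Throwing on a missing field, rather than treating it as zero usage, avoids silently skipping alerts when the response is not what the code expects.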

I have created a Lambda function that monitors the storage. You can find the code here.

Conclusion #

In this article, we’ve explored the six best practices related to the first Reliability pillar question: How do you manage Service Quotas and constraints?

Being aware of these best practices and actively monitoring them using AWS service quotas, AWS CloudWatch alarms, and AWS SDK for quota automation is the first step towards ensuring a robust and reliable serverless architecture.

Finally, we’ve used AWS Lambda service quotas as an example to demonstrate both the creation of a CloudWatch alarm and the use of the AWS SDK to manage and monitor quotas.

If you have questions or would like to provide feedback, email me at martina.theindiecoder@gmail.com 🚀

That’s all folks! See you later.

Resources #

- Manage service quotas and constraints

- REL01-BP01

- REL01-BP02

- REL01-BP03

- REL01-BP04

- REL01-BP05

- REL01-BP06

Stack used: